What was the most difficult part of the course? Anything you'd do differently?

Blogging:

The most difficult part of this course was... the blogging! I am not very talented at coming up with new topics, and I found it hard to stay focused on one target topic even when I didn't have to go out and find sources. The challenge was mostly having too much freedom and wondering how to be creative enough with pictures, videos, diagrams, etc.

While this was the difficult part, I would certainly not remove it from the course. I do believe that most folks coming up in technology are "gun-shy" about making posts like this online with their name tied to them. Everything on the internet is now permanent, meaning these very sentences can be tied back to my name. So speaking from a limited understanding of a topic, or from a particular position or mindset, can come back and shape someone's opinion of us. That said, I believe that not doing this limits creativity and the willingness to address issues collaboratively as they arise. I may not have the right direction or ability to fix something, but I could start a conversation with someone who has an idea that moves many readers in the right direction to solve security-related issues. That is the beauty of blogging.

Capstone Assignment:

The capstone was an all-around interesting assignment that challenges students to work through a typical third-party security consultation for an organization. This semester, it made us think like we were on the job, working with an audience/customer that didn't know the whole world of cybersecurity. This required us to analyze everything as an IT person while presenting it as a marketer (knowing the audience, speaking at their level of understanding, and verifying that they can interpret and absorb everything).

Overall, the project required knowing how to assess an environment, create Visio diagrams, perform risk assessments, review lists of vulnerabilities, and weigh cost, likelihood, etc. However, there was a good deal of overlap with the risk assessment course (in terms of the target case environment). I think we should have had a different environment so the risk assessment tables would be different. While there were a couple of changes, they were not enough to stimulate a deeper dive. I also think the client presentations should have some form of template. The assumption is that we are supposed to be able to provide some sort of consulting services, and I think a course that focused more on translating IT into the business world would be useful, such as a bridge course for integrating IT with an MBA-like or CIO role.

One thing I wish we could change is the way discussion posts are done. I wish there were a better way for us to communicate. I often don't appreciate how shallow discussion posts are, and I don't think they stimulate critical thinking for most folks. I wish there were some sort of seminar hours where we could all get together on a web chat and talk with one another and the professor. I understand that the biggest draw of online education is flexibility, but perhaps we could do something like the 'Marco Polo' app, where everyone records and submits video clips to hold an in-person-style discussion over the course of a week. I think that would kill the redundancy and require folks to think more instead of writing cheesy posts.

Well, I believe this is my last post for my graduate degree =D

Saturday, February 23, 2019

Saturday, February 16, 2019

Week 10: Action Plans Galore

When looking at any system, most folks focus on the bad, ugly parts that stick out. There is a certain level of shock and awe that really draws folks in. I'm not sure if that is our way of coping with our own failures and those of others, or a means of distracting ourselves from the work ahead. Inevitably, any sane person or organization will take those shortcomings and implement a fix to correct them, right? Actually, in my experience, this is where more folks shy away, because it means work is required: work that includes thinking through or engineering a solution, spending money and time, and jeopardizing their standing if the solution fails and wastes all of the aforementioned. When I see folks shy away on my projects, I gently remind them that on my staff I care more about folks who are willing to make mistakes (learn from them and pivot to another solution) than those who are too cautious and freeze. It is usually pretty easy to spot folks who will just burn the whole place down, but a workforce really needs folks who are able to take risks and think outside the box.

However, the most beautiful thing is to look at the problem and start engineering a solution! Even if there is a "top 10 solutions for (x) problems" guide out there, you are still implementing a solution for your environment. This means that at some point, albeit at varying levels of implementation, the team has to do SOMETHING to fix an issue. Most of the time, providing a fix for an issue is a good thing, whether you are making a profit or providing protection. But as John Gibson states about halfway into the video below, security is a business issue, not an IT issue! This means that, yes, it will impact the business in some way. Decisions need to be made from ethical and financial standpoints that are outside the usual scope of the CTO.

So what does that mean for us "Action Planners"? It means we need to be able to find solutions or options that vary in size, cost, scope, implementation, etc., and present them to the shot callers so that they can make the best decision for the organization. Sometimes we may lean toward one option while they choose another, but that doesn't mean they are wrong. Much like not knowing the workload or priorities of other staff members, we don't know everything they are weighing up there around the board of directors' table. However, if from our level we can take action, think, and provide a range of potential solutions in our action plans, then they are much more likely to make a sound decision for the betterment of the organization as a whole.

Sunday, February 10, 2019

Week 9: Spectre and Meltdown!

Meltdown|Spectre: The hardware vulnerabilities that leak OS-level data

First and foremost, we have to give credit to Google's Project Zero for finding and publicly disclosing this massive flaw. There is no doubt in my mind that Google was also affected by these huge vulnerabilities, but even this massive tech giant felt it was critical that the world knew about Meltdown and Spectre. Not only did they identify the flaws, but they told the world exactly where to start looking, because this is a whole new vector, or perspective, of vulnerabilities. Much like the first gold rush in the US, once one nugget was found... it caused so many others to start looking for more!

Here is their post from January 3rd, 2018: https://googleprojectzero.blogspot.com/2018/01/reading-privileged-memory-with-side.html

Recently, I learned a good deal more about Spectre and Meltdown, and I found them pretty fascinating. The overall process reminded me of dumpster diving. At one point in time, folks didn't really concern themselves with what they threw away. They would throw away unopened credit card offers, bank statements they had finished reviewing, or even old cards/checks without defacing them first.

On a more technical level, I found this site extremely useful: https://www.csoonline.com/article/3247868/vulnerabilities/spectre-and-meltdown-explained-what-they-are-how-they-work-whats-at-risk.html

The overall gist is that these side-channel techniques can access data they don't have permission to read, because the data is left in places (such as the CPU cache) that can be reached faster than the CPU can complete its permissions check.

There are two compute mechanisms at play here. The first is the CPU's ability to front-load operations and data while it waits for another, longer process to complete and tell it what to do. This is known as speculative execution.

For instance, when building a house, we may be waiting on supplies to be delivered before completing the door frames in the master bedroom. These supplies can come in as either aluminum or wood. Regardless of which arrives, we can still go through and measure out what the cuts would be (thicker cuts for wood and thinner for metal). When the materials arrive, we would throw away the measurements for the material we aren't using, right? But why go through this process? Well, waiting for the supplies before taking measurements would take longer because we would have to wait for the delivery. Figuring out the measurements for both scenarios beforehand is way faster, and we can just discard the wrong ones. Critically, before the supplies ever arrive at the jobsite, anyone can come over and ask for both sets of measurements.

In these side-channel memory attacks, while a process is waiting on a slow operation (like a larger math problem), speculative execution crams in several scenarios of data loads that may or may not be used. The data that isn't used is then discarded improperly: the second mechanism, the CPU cache, still holds traces of it. Even while the process is waiting for the larger math problem to complete, a smaller/faster process may sweep in, ask about both data sets, and aggregate enough information before the processor ever determines whether the process has access to that data.
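To make the "measurements left lying around" idea concrete, here is a toy simulation in Python. It is a sketch under loud assumptions: nothing touches real hardware, the "cache" is just a Python set, the cycle counts are made-up numbers, and it models only the bounds-check-bypass flavor (Spectre variant 1) with a Flush+Reload-style probe while skipping branch-predictor training entirely. `ToyCPU`, `victim_read`, and `leak` are hypothetical names for illustration.

```python
from __future__ import annotations

# Illustrative cycle counts only (assumed); real numbers vary by CPU.
HIT_CYCLES = 40     # cost of reading a cached probe line
MISS_CYCLES = 300   # cost of reading an uncached probe line


class ToyCPU:
    """Pure simulation: the 'cache' is a Python set of probe-line numbers."""

    def __init__(self, public: bytes, secret: bytes) -> None:
        # Flat toy address space: the secret sits right after the public array.
        self.memory = public + secret
        self.public_len = len(public)
        self.cache: set[int] = set()

    def flush(self) -> None:
        # "Flush" step: evict every probe line.
        self.cache.clear()

    def victim_read(self, x: int) -> int | None:
        # 1. Speculation: the CPU assumes the bounds check will pass and runs
        #    the body early, using the loaded byte to pick a probe line.
        value = self.memory[x]
        self.cache.add(value)
        # 2. The bounds check resolves. An illegal read is squashed, so the
        #    caller never receives `value`, but the cache line stays warm.
        if x < self.public_len:
            return value
        return None

    def probe(self, line: int) -> int:
        # "Reload" step: a timed access to one of the 256 probe lines.
        return HIT_CYCLES if line in self.cache else MISS_CYCLES


def leak_byte(cpu: ToyCPU, x: int) -> int:
    cpu.flush()
    cpu.victim_read(x)  # architecturally returns None for out-of-bounds x
    timings = [cpu.probe(line) for line in range(256)]
    # The single fast line reveals the byte that was read speculatively.
    return min(range(256), key=lambda line: timings[line])


def leak(cpu: ToyCPU, length: int) -> bytes:
    start = cpu.public_len  # first out-of-bounds offset
    return bytes(leak_byte(cpu, start + i) for i in range(length))


if __name__ == "__main__":
    cpu = ToyCPU(public=bytes(16), secret=b"hunter2")
    print(cpu.victim_read(16))  # None: the direct read is "denied"
    print(leak(cpu, 7))         # b'hunter2': recovered through cache timing
```

The design choice worth noticing is that the permission check does run and does deny the read; the leak comes entirely from the side effect (which probe line is warm) that the discard step never cleans up.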

Week 8: audit process v. threat model

In my current course on analyzing an environment and applying a security posture against its current vulnerabilities, threats, exposures, etc., we have been working on how to create and apply threat models.

During the first few weeks we learned about threat models in general and how to create our own. As usual, there were a couple of industry standards out there; however, one point was driven home: threat models are constantly being updated and tweaked, whether for a specific project, a target audience, or because there is a new vector to account for.

Initially, I thought the threat model was synonymous with the normal IT audit life cycle. The audit life cycle identifies assets/systems/processes to create a baseline. Then it analyzes the state of each of those items to annotate everything that is wrong. Then the auditors generate a report on each of those items with recommendations and submit it to the customer. Some of these reports then rank the recommendations, showing value vs. likelihood vs. risk exposure, etc. Finally, they demonstrate how these items can be monitored over the time remaining before the next audit.
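The "value vs. likelihood vs. risk exposure" ranking step can be sketched with one common quantitative approach: Single Loss Expectancy (SLE = asset value × exposure factor) and Annualized Loss Expectancy (ALE = SLE × annualized rate of occurrence). This is an assumption about how such a report might rank findings, not necessarily the method our course or any particular auditor uses, and every finding and dollar figure below is hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Finding:
    name: str
    asset_value: float      # dollar value of the affected asset (AV)
    exposure_factor: float  # fraction of AV lost per incident, 0..1 (EF)
    aro: float              # expected incidents per year (ARO)

    @property
    def sle(self) -> float:
        # Single Loss Expectancy = AV x EF
        return self.asset_value * self.exposure_factor

    @property
    def ale(self) -> float:
        # Annualized Loss Expectancy = SLE x ARO
        return self.sle * self.aro


def prioritize(findings: list[Finding]) -> list[Finding]:
    # Highest annualized exposure first: the order a report might present fixes.
    return sorted(findings, key=lambda f: f.ale, reverse=True)


if __name__ == "__main__":
    # Hypothetical findings with made-up numbers, purely for illustration.
    findings = [
        Finding("Lost unencrypted laptop", 3_000, 1.0, 2.0),
        Finding("Unpatched web server", 200_000, 0.25, 0.5),
        Finding("Weak VPN passwords", 400_000, 0.125, 0.25),
    ]
    for f in prioritize(findings):
        print(f"{f.name}: SLE ${f.sle:,.0f}, ALE ${f.ale:,.0f}/yr")
```

With these made-up inputs, the web server (ALE $25,000) outranks the VPN ($12,500) and the laptop ($6,000), even though the VPN and web server share the same $50,000 SLE; the likelihood term is what separates them.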

So what I'm curious about is: which came first, the audit process or the de facto threat model? Will the audit process change if the de facto threat model changes? I assume so, and that it would be a sweeping change with wide adoption.

I have learned that there are additional steps and tools that can help produce this report. The combination of these tools has changed the presentation at the end, and perhaps therein lies the answer. Perhaps the audit is geared more toward the department level and operations, while the threat model is geared more toward senior leadership and executives at the company.

The tools we have used most in this course are things like flow diagrams that give a very simplistic overview of how a system does or should operate. Working through STRIDE visually, so that it sticks, is a great example.

![Relation between STRIDE security attributes and security service](https://www.researchgate.net/publication/283205029/figure/fig6/AS:325314161987590@1454572348624/Relation-between-STRIDE-security-attributes-and-security-service-for-HTN.png)
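The same STRIDE relationships can be turned into a small checklist generator. This is a sketch assuming the widely used "STRIDE-per-element" convention for data-flow diagrams (external entities: S, R; processes: all six; data flows: T, I, D; data stores: T, R, I, D); the diagram elements and function names are hypothetical.

```python
from __future__ import annotations

# Each STRIDE threat paired with the security property it violates.
STRIDE = {
    "S": ("Spoofing", "Authentication"),
    "T": ("Tampering", "Integrity"),
    "R": ("Repudiation", "Non-repudiation"),
    "I": ("Information disclosure", "Confidentiality"),
    "D": ("Denial of service", "Availability"),
    "E": ("Elevation of privilege", "Authorization"),
}

# STRIDE-per-element: threats that usually apply to each diagram element type.
PER_ELEMENT = {
    "external_entity": "SR",
    "process": "STRIDE",
    "data_flow": "TID",
    "data_store": "TRID",  # R matters mainly when the store holds audit logs
}


def threats_for(element_type: str) -> list[tuple[str, str]]:
    return [STRIDE[letter] for letter in PER_ELEMENT[element_type]]


def checklist(elements: list[tuple[str, str]]) -> list[str]:
    """Turn (name, element_type) pairs into one review line per threat."""
    lines = []
    for name, kind in elements:
        for threat, prop in threats_for(kind):
            lines.append(f"{name}: {threat} threatens {prop.lower()}")
    return lines


if __name__ == "__main__":
    # Hypothetical diagram of a small web application.
    dfd = [
        ("Customer browser", "external_entity"),
        ("Web app", "process"),
        ("HTTPS request", "data_flow"),
        ("Orders DB", "data_store"),
    ]
    for line in checklist(dfd):
        print(line)
```

Walking every element of the flow diagram this way gives a repeatable list of questions to answer, which is handy when the threat model has to be re-run after the environment changes.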

Week 7: scripting

Programming Humor Meme of the week --

I've been told that my PowerShell cohorts don't appreciate my naming conventions. They prefer longer variable names that tie specifically to the script. I don't like that; I prefer to keep names simple so I can focus on the functionality of what my scripts are doing. This meme kinda reminds me of that. It shows that someone out there likes to put their brackets on the side. Personally, they're dumb and this is terrible >:-D but to each their own.

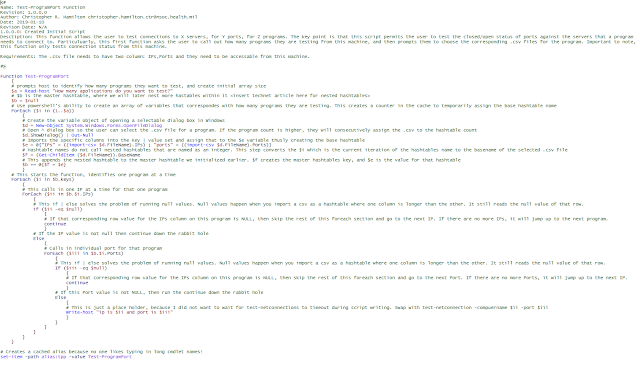

Here is a quick example of the format I use for my scripts (this was a teaching element I used for one of my teams on PowerShell's nested hashtables):

To me, long, verbose variable names in a PowerShell script can sometimes lead the reader astray. If the reader needs some help with a line, that is what comments are for. When jumping between so many different scripting languages, I feel it is easier to use a similar format across them all for familiarity.
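The original PowerShell snippet did not survive in this copy of the post, so the following is not it. As a hypothetical analogue, here is the same nested-hashtable idea in Python (nested dicts), written in the short-names-plus-comments style described above; the sites, hosts, and addresses are invented for illustration.

```python
# Hypothetical inventory: site -> host -> attributes (all names invented).
inv = {
    "hq": {
        "dc01": {"os": "Windows Server 2016", "ip": "10.0.0.10"},
        "web01": {"os": "Windows Server 2012 R2", "ip": "10.0.0.20"},
    },
    "branch": {
        "fs01": {"os": "Windows Server 2016", "ip": "10.1.0.5"},
    },
}

# Short names, with the comment doing the explaining:
# s = site, hs = that site's hosts, h = host name, a = host attributes
for s, hs in inv.items():
    for h, a in hs.items():
        print(f"{s}/{h}: {a['os']} @ {a['ip']}")

# Nested lookup, roughly $inv['hq']['dc01']['ip'] in PowerShell.
ip = inv["hq"]["dc01"]["ip"]

# Hosts still on 2012 R2, found by walking both levels.
old = [h for hs in inv.values() for h, a in hs.items() if "2012" in a["os"]]
```

Whether `s`/`h`/`a` or `$siteName`/`$hostName`/`$hostAttributes` reads better is exactly the debate in the meme; the structure of the nested lookup is the same either way.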

PowerShell is important because it is Microsoft's newer language that can interact fully with the system's internals. Microsoft is gearing itself toward an operating system that can be operated manually through a user-friendly language. What's more, it permits users to create their own cmdlets, functions, modules, and packages.

How does this tie into security? How about PowerShell Empire? This was a site that started the push for using PowerShell to gather information about local/remote systems to help test security mechanisms. While the original site is no longer maintained (http://www.powershellempire.com/?page_id=110), there is a GitHub repository for PowerShellEmpire 3 (https://github.com/EmpireProject/Empire/wiki/Quickstart).

PowerShell Empire 3 from GitHub is a massive module with tons of PowerShell variants for things like code execution, credential gathering, exploitation, exfiltration, recon, and much more. Here is a more recent how-to video using it:
